This blog post explores the different paradigms evaluators may hold and asks how these paradigms interact with evaluators’ backgrounds and influence their practices.

People are often worried about the “right way” of doing things. As an evaluator, you may have come across other evaluators and thought “their way of evaluating is just like mine” or, indeed, the opposite, “I wouldn’t have approached the subject or data collection in that way”. But what if there is no single right way, but several, each shaped by your personal worldview?

The notion of evaluator paradigms

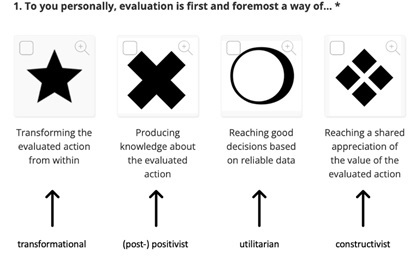

This is what Egon Guba and Yvonna Lincoln [1] suggested in the late 1980s with the notion of “evaluator paradigms”. They used the term not so much in the sense of scientific paradigms, but rather to refer to sets of beliefs shared by groups of evaluators. In the words of Donna Mertens and Amy Wilson, evaluator paradigms are “broad metaphysical constructs that include sets of logically related philosophical assumptions […] Your philosophical assumptions, of which you may not yet be aware, will determine which of the [different] paradigms you work in most comfortably as an evaluator.”[2] Mertens and Wilson identified four paradigms: transformational, (post-)positivist, utilitarian and constructivist.

The whole concept of paradigms sounded very attractive. But then we asked ourselves: to what extent do they apply in practice? Do evaluators recognize themselves in these paradigms and, if so, how common are the various types? Can we trace them back to evaluators’ backgrounds? To answer these questions, we developed a simple quiz of eight questions based on everyday professional situations. Every question had four possible answers, each reflecting one of the four paradigms (though not labelled as such). Respondents could choose one or two answers to each question. A separate questionnaire collected information on the respondents’ countries of origin, education and experience. After a testing phase, the quiz was disseminated through Voluntary Organizations for Professional Evaluation (VOPEs) in Europe, Canada and French-speaking Africa.

Figure 1: The first question of the quiz and the corresponding paradigms

Since the survey’s launch in June 2019, more than 800 evaluators from almost 30 countries have responded.
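For readers who like to see the mechanics, here is a minimal sketch of how answers to a quiz like this could be turned into a paradigm ranking. It is not the scoring we used: the one-point-per-selected-answer rule, the tie-breaking rule, the function names and the example respondent are all assumptions made up for illustration.

```python
from collections import Counter

# The four paradigms named in this post (labels chosen for the sketch).
PARADIGMS = ("transformational", "positivist", "utilitarian", "constructivist")


def tally_paradigms(answers):
    """Count how often each paradigm was picked across the quiz questions.

    `answers` has one entry per question; each entry lists the one or two
    paradigms behind the options the respondent selected.
    Assumption: every selected answer counts as one point.
    """
    counts = Counter({paradigm: 0 for paradigm in PARADIGMS})
    for picked in answers:
        if not 1 <= len(picked) <= 2 or len(set(picked)) != len(picked):
            raise ValueError("each question takes one or two distinct answers")
        for paradigm in picked:
            if paradigm not in PARADIGMS:
                raise ValueError(f"unknown paradigm: {paradigm}")
        counts.update(picked)
    return counts


def rank_paradigms(counts):
    """Order paradigms from most to least picked.

    Assumption: ties keep the order of PARADIGMS (sorted() is stable).
    """
    return sorted(PARADIGMS, key=lambda paradigm: -counts[paradigm])


# A made-up respondent answering eight questions (not survey data).
example_answers = [
    ["constructivist"],
    ["constructivist", "utilitarian"],
    ["utilitarian"],
    ["constructivist"],
    ["positivist"],
    ["constructivist", "transformational"],
    ["utilitarian"],
    ["constructivist"],
]

example_counts = tally_paradigms(example_answers)
example_ranking = rank_paradigms(example_counts)
print(example_ranking[0])  # the paradigm this respondent would rank first
```

Under these assumptions, a respondent’s “first” paradigm is simply the one behind the largest number of selected answers. A different weighting, for instance half a point each when two answers are given, could change the ranking.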

This is what we found

First, respondents recognized themselves in the “daily-life situations” presented in the quiz, as well as in the four paradigms. Judging by initial testing and comments in the survey, the “transformational” paradigm was the only one that raised some questions.

Second, the constructivist paradigm was the most common one in our sample, with 44 percent of respondents ranking it first. The second most common was the utilitarian paradigm (32 percent), followed by the positivist (21 percent) and transformational (4 percent) paradigms. This order varied slightly from country to country. For instance, a majority of respondents in Switzerland and Germany ranked the utilitarian paradigm first, while none put the transformational paradigm first. You may have already answered the EvalForward version of this quiz, which collected over 500 answers. Here, too, the constructivist profile ranked first, followed by the utilitarian profile.

It was possible to choose one or two answers to each question, and respondents often made use of that possibility. This ties in with the third thing we learned from the survey: when faced with specific situations, respondents were more likely to combine paradigms. While 35 percent of respondents chose a single answer to each question, 65 percent chose to give two answers at least once. Only 7 percent chose a single paradigm for all questions. We also noticed that almost half of respondents chose a transformational answer at least once.
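The shares quoted above can be derived from the raw answers in a few lines. The sketch below shows one way to do it, assuming each respondent’s answers are stored as a list of one or two paradigm labels per question. The function names and the four toy respondents (shortened to three questions each) are invented for illustration and are not survey data.

```python
def combination_profile(answers):
    """Describe how one respondent combined paradigms across the quiz."""
    paradigms_used = {paradigm for picked in answers for paradigm in picked}
    return {
        # Never used the option of giving two answers.
        "single_answer_throughout": all(len(picked) == 1 for picked in answers),
        # Gave two answers to at least one question.
        "two_answers_at_least_once": any(len(picked) == 2 for picked in answers),
        # Every selected answer reflects the same paradigm.
        "single_paradigm_throughout": len(paradigms_used) == 1,
        # Picked a transformational answer at least once.
        "transformational_at_least_once": "transformational" in paradigms_used,
    }


def share(respondents, flag):
    """Fraction of respondents for whom the given profile flag is true."""
    profiles = [combination_profile(answers) for answers in respondents]
    return sum(profile[flag] for profile in profiles) / len(profiles)


# Four toy respondents with three questions each (hypothetical data).
toy_respondents = [
    [["constructivist"], ["constructivist"], ["constructivist"]],
    [["utilitarian"], ["positivist"], ["utilitarian"]],
    [["constructivist", "utilitarian"], ["utilitarian"], ["positivist"]],
    [["transformational", "constructivist"], ["constructivist", "utilitarian"], ["constructivist"]],
]

for flag in combination_profile(toy_respondents[0]):
    print(flag, share(toy_respondents, flag))
```

Because each of the four options for a question reflects a different paradigm, giving two answers always means combining two paradigms. That is why the respondents who stuck to a single paradigm are a subset of those who gave a single answer throughout.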

Fourth, we wondered whether there were any determining factors that prompted respondents to select a given paradigm. For example, does experience influence one’s outlook on one’s work as an evaluator? Based on our initial multivariate analysis, variables such as gender, age, career level, number of evaluations conducted, educational level, academic background and work sector seem to have a limited influence on the average number of responses collected for each paradigm.

Still, it appears that the academic background and the work sector in which respondents have gained significant experience influence how likely they are to pick the transformational and positivist paradigms (the two least frequent paradigms). For instance, economists tend to give more positivist responses, while respondents with significant experience in evaluating employment-related policies are more likely to pick the transformational and utilitarian paradigms.

It seems most likely, though, that paradigm preferences are influenced by broader aspects, such as our culture or our past. For instance, country differences hint at different national evaluation cultures that might play a role in these results. As Mertens and Wilson underlined, “evaluators do not seem to leave their past behind”[3].

In the end, this research also questions the notion of paradigms themselves. Seasoned evaluators taking the quiz noted that they could agree with all prompts, depending on context. But they also said they felt more at ease with some of them, suggesting the idea of a “comfort zone”. This zone might be expanded somewhat through the accumulation of experience and reflexivity, with some paradigms still staying outside it (for example, “I’m comfortable with being a mediator, or a scientist or a pragmatist, but I really feel uneasy with the transformational paradigm”). Certainly a topic for further study!

Research limitations

It should be noted that the results are not representative of the population of evaluators in each country. Answers were provided on a voluntary basis by those who had access to the survey. Furthermore, obtaining a representative sample is probably not feasible, as little is known about the exact population of evaluators in most countries (such as who identifies as an evaluator, and based on which criteria).

We would also like to point out that:

- The quiz was designed to be simple and help people understand their own inclinations. For instance, paradigms were always presented in the same order to avoid a bias in which the first information listed was considered more important and to facilitate self-assessment.

- There were no specific tests of the validity of the measurement scale, although the survey was tested by multiple evaluators from different countries and professional backgrounds prior to dissemination.