Silva Ferretti is a freelance consultant with extensive international experience in both development and humanitarian work. She has worked with diverse organizations, committees, networks and consortia (e.g. Agire, ActionAid, CDAC, DEC, ECB project, Handicap International, HAP, Plan International, Save the Children, SPHERE, UNICEF, World Vision, amongst many others).

Her work focuses mainly on the quality of programs and on improving their accountability and responsiveness to the needs, capacities and aspirations of affected populations.

Her work has included impact evaluations and program documentation; setting up toolkits, methodologies, standards, frameworks and guidelines; coaching, training and facilitation; and field research and assessments.

Throughout her work, Silva emphasizes participatory approaches and learning. She has a solid academic background and has also collaborated with academic and research institutions on short workshops covering a broad range of topics (including innovations in impact evaluation, Disaster Risk Management, participatory methodologies, protection, and communication with affected populations).

She emphasizes innovation in her work, such as the use of visuals and videos in gathering and presenting information.

Silva Ferretti

Freelance consultant

Hello Yosi, thanks for this very important question!

I am collecting some tips on including environmental issues in evaluation. This is one of them; I hope to share more.

See, a "thinking environment" is a mindset.

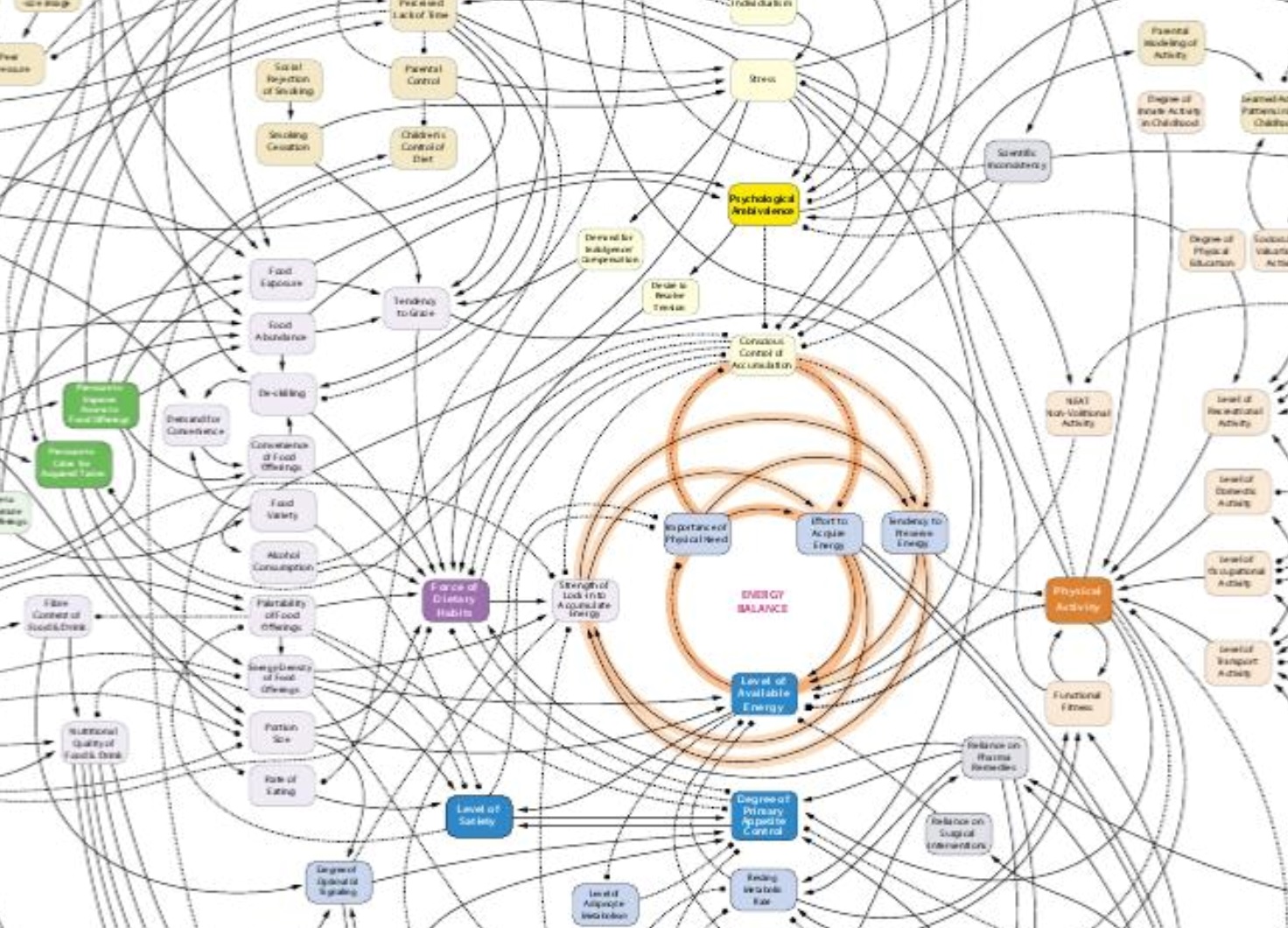

The moment we take a more ecosystemic perspective, we will immediately realize the limitations of our approaches.

But we also discover that simple things, such as an extra question, can go a long way. :-)